FLgym: Towards Robust and Byzantine-Resilient Federated Learning

Abstract

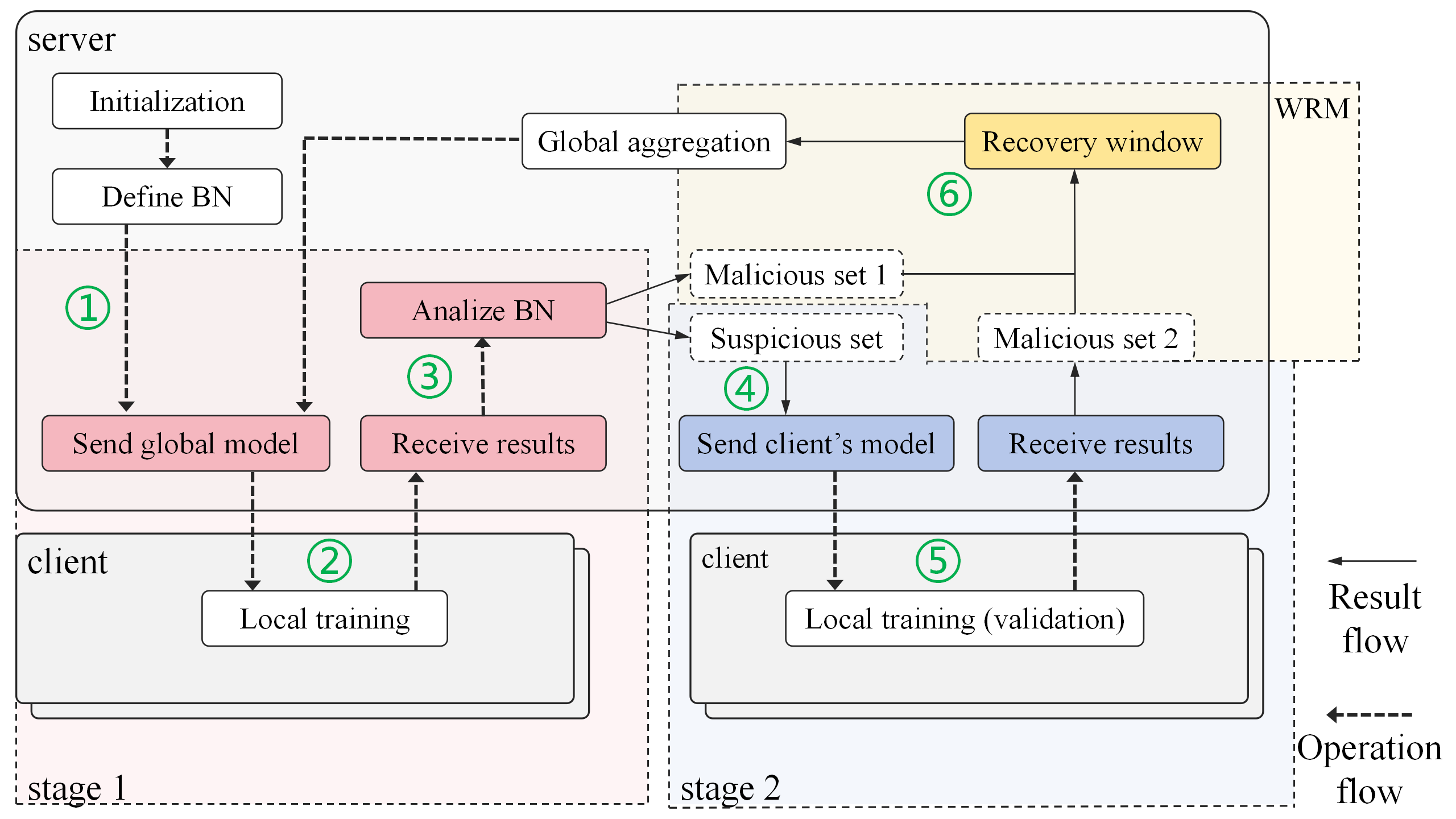

Federated learning (FL) has emerged as a popular paradigm for collaborative model training across decentralised data clients while preserving data privacy. However, FL is inherently vulnerable to poisoning attacks. As these attacks grow more sophisticated, various defence mechanisms have been proposed to mitigate the threats. Most existing defences adopt a single perspective on Byzantine client detection, resulting in both false positives and false negatives. We propose FLgym, a two-stage framework for Byzantine-resilient FL that integrates three components: a model similarity-based detection mechanism, a validation mechanism based on similarity estimation of clients’ local data, and a weight recovery mechanism for identified Byzantine clients. Extensive experiments show that FLgym consistently outperforms state-of-the-art baselines, achieving the highest model accuracy, a true positive rate of 90.95%, and the lowest false positive rate of 6.3%.
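To make the idea of model similarity-based detection concrete, the following is a minimal sketch and not FLgym's actual algorithm, which the abstract does not specify. It assumes each client update is a flat list of floats, uses the coordinate-wise median of all updates as a reference, and flags clients whose cosine similarity to that reference falls below a threshold. The names `cosine`, `flag_suspects`, and `threshold` are hypothetical and chosen only for illustration.

```python
import math
from statistics import median


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def flag_suspects(updates, threshold=0.0):
    """Return indices of updates that point away from the median update.

    Assumption: the reference is the coordinate-wise median of all client
    updates, and `threshold` is an illustrative cut-off, not a value taken
    from the paper.
    """
    dim = len(updates[0])
    reference = [median(u[i] for u in updates) for i in range(dim)]
    return [i for i, u in enumerate(updates) if cosine(u, reference) < threshold]


# Toy example: three similar updates and one sign-flipped update.
updates = [
    [1.0, 0.9, 1.1],
    [0.9, 1.0, 1.0],
    [1.1, 1.0, 0.9],
    [-1.0, -1.0, -1.0],  # simulated poisoned (sign-flipped) update
]
suspects = flag_suspects(updates)
print(suspects)
```

A single similarity check like this is the kind of one-perspective detector that can misclassify clients, for example honest clients with unusual data. That limitation is what a second validation stage based on local data similarity is meant to address.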

Illustration